Top Machine Learning and Artificial Intelligence Tools Everyone Should Use

Machine learning (ML) and artificial intelligence (AI) software are the tools of choice for data scientists. Many articles already list reliable ML and AI tools and describe their functionality in depth. We have tried to add practical insight by including feedback from our own experience. Below, we answer five major questions in brief, followed by more detailed descriptions later in the article. Take a look, and happy learning!

- What are the most popular machine learning languages?
  - Python
  - R
  - C++
- What are the top data analytics and visualization tools for AI?
  - pandas
  - Matplotlib
  - Jupyter Notebook
  - Tableau
- What are the best frameworks for general machine learning?
  - NLTK
  - scikit-learn
  - NumPy
- What are the best machine learning frameworks for neural network modelling?
  - TensorFlow
  - TensorBoard
  - PyTorch
  - Keras
  - Caffe2
- What are the top big data tools?
  - MemSQL
  - Apache Spark

Detailed answers to each question follow:

1. The most popular machine learning languages:

Python: a very popular language with high-quality machine learning and data analysis libraries: Python is a general-purpose language favored for its readability, good structure, and relatively gentle learning curve, and it continues to gain popularity. According to Stack Overflow’s annual Developer Survey, conducted in January 2018, Python can be called the fastest-growing major programming language. It ranked as the seventh most popular language (used by 38.8 percent of respondents), one place ahead of C# (34.4 percent).

Grant Reaber, head of research at Respeecher, who specializes in deep learning applied to speech recognition, uses Python because “almost everyone currently uses it for deep learning.”

Illia Polosukhin, co-founder of the NEAR.AI startup, who previously managed a deep learning team at Google Research, also sticks with Python: “Python was always a language of data analysis, and, over the time, became a de-facto language for deep learning with all modern libraries built for it.”

One of Python’s main uses in machine learning is model development, particularly prototyping.

Facebook AI researcher Denis Yarats notes that the language has an excellent toolset for deep learning, such as the PyTorch framework and the NumPy library.

C++: a middle-level language used for parallel computing on CUDA: C++ is a flexible, object-oriented, statically typed language based on the C programming language. It remains popular among developers thanks to its reliability, its performance, and the large number of application domains it supports. It is also used to develop drivers and other software that interacts directly with hardware under real-time constraints. And because C++ is clean enough to explain basic concepts, it is used in research and teaching.

Data scientists use C++ for a variety of specialized tasks.

Andrii Babii, a senior lecturer at the Kharkiv National University of Radioelectronics (NURE), uses C++ for parallel implementations of algorithms on CUDA, an Nvidia GPU compute platform, to speed up applications based on those algorithms.

R: A language for statistical computing and graphics: R, a language and environment for statistics, visualizations, and data analysis, is a top pick for data scientists. It’s another implementation of the S programming language.

R and the libraries written in it provide numerous graphical and statistical techniques, such as classical statistical tests, linear and nonlinear modeling, time-series analysis, classification, and clustering. The language is easily extended with packages, including many for machine learning. R also produces high-quality plots that can include formulae and mathematical symbols.

2. Data analytics and visualization tools:

pandas: a Python data analysis library enhancing analytics and modelling: Wes McKinney, a data science expert, developed this library to make data analysis and modeling convenient in Python. Before pandas, Python worked well mainly for data preparation and munging, but less so for analysis and modeling.

pandas simplifies analysis by loading CSV, TSV, and JSON files or a SQL database into a DataFrame, a Python object that resembles an Excel or SPSS table with rows and columns.
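As a minimal sketch of that conversion, assuming pandas is installed, the snippet below loads the same small table from CSV, TSV, and JSON text into DataFrames. The text is inlined with `io.StringIO` so the example needs no files on disk; the column names and values are made up for illustration.

```python
import io

import pandas as pd

# Hypothetical CSV content, inlined so the example is self-contained.
csv_text = """city,temperature,humidity
Kyiv,21.5,0.55
Kharkiv,19.0,0.61
Lviv,18.2,0.70
"""

# read_csv accepts any file-like object, so StringIO stands in for a real file.
df = pd.read_csv(io.StringIO(csv_text))

# TSV is the same format with a tab separator.
tsv_text = csv_text.replace(",", "\t")
df_tsv = pd.read_csv(io.StringIO(tsv_text), sep="\t")

# Round-trip the table through JSON records and read it back.
json_text = df.to_json(orient="records")
df_from_json = pd.read_json(io.StringIO(json_text), orient="records")

# The result behaves like a spreadsheet: rows, named columns, quick statistics.
print(df.shape)  # (3, 3)
print(df["temperature"].mean())
print(df.sort_values("humidity", ascending=False).head(2))
```

For a SQL source, `pd.read_sql` plays the same role, given a database connection.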

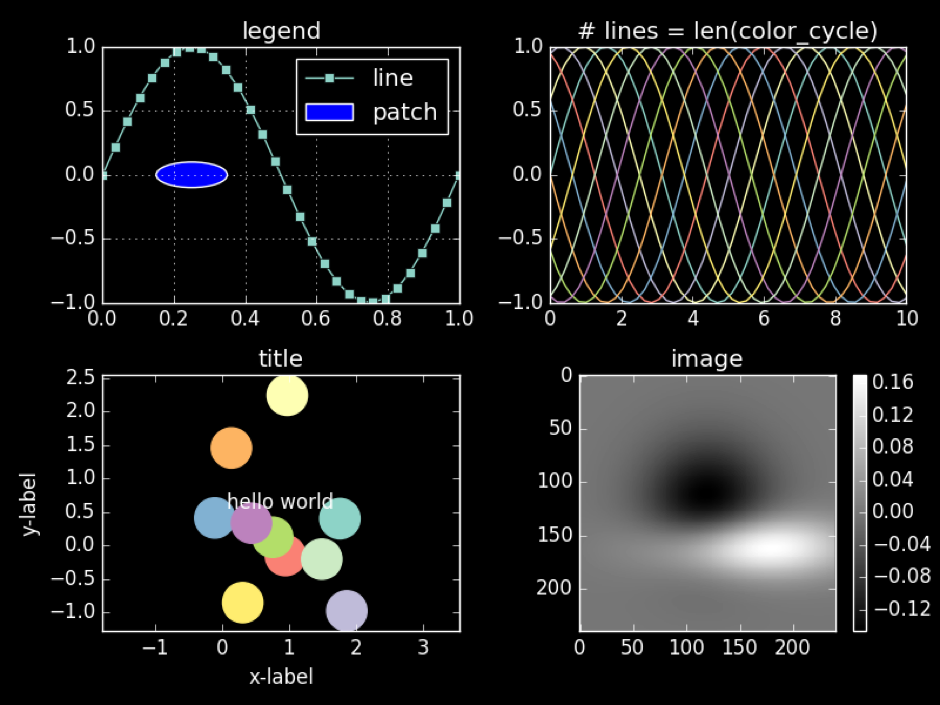

Matplotlib: a Python library for quality visualizations: Matplotlib is a Python 2D plotting library inspired by MATLAB: its creator, John D. Hunter, emulated the plotting commands of MathWorks’ MATLAB software.

Although it is written mostly in Python, the library makes heavy use of NumPy and other extension code, so it performs well even on large arrays.

It can generate production-quality visualizations with just a few lines of code.
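To illustrate the “few lines of code” point, here is a minimal sketch that plots two NumPy-generated curves and saves the figure as a PNG. It assumes Matplotlib and NumPy are installed and uses the non-interactive `Agg` backend so it runs without a display; the data, labels, and figure size are arbitrary.

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend: render to files, no window needed
import matplotlib.pyplot as plt
import numpy as np

# Sample data: a sine and a cosine wave over one period.
x = np.linspace(0, 2 * np.pi, 200)

fig, ax = plt.subplots(figsize=(6, 3))
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), label="cos(x)", linestyle="--")
ax.set_xlabel("x (radians)")
ax.set_ylabel("value")
ax.set_title("Two curves in a few lines of Matplotlib")
ax.legend()
fig.tight_layout()

# Save to an in-memory PNG; pass a filename such as "waves.png" to write to disk.
buffer = io.BytesIO()
fig.savefig(buffer, format="png", dpi=150)
plt.close(fig)
png_bytes = buffer.getvalue()
```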

Data science practitioners note that Matplotlib’s flexibility and integration capabilities are well ahead of those of other plotting libraries.

Denis Yarats (Facebook AI Research) says he chooses Matplotlib mainly because it integrates well with the Python toolset and works with the NumPy library and the PyTorch machine learning framework.

Jupyter Notebook: collaborative work capabilities: The Jupyter Notebook is a free web application for interactive computing. With it, users can create and share documents that contain live code, run that code, and present and discuss the results. A notebook can contain graphics and narrative text, and it can be shared via Dropbox, email, GitHub, or the Jupyter Notebook Viewer.

The notebook is rich in functionality and supports many use scenarios. It integrates with numerous tools, such as Apache Spark, pandas, and TensorFlow, and supports more than 40 programming languages, including R, Scala, Python, and Julia. Jupyter Notebook can also run on container platforms such as Docker and Kubernetes.

Illia Polosukhin from NEAR.AI says he uses Jupyter Notebook mostly for custom ad hoc analysis: “The application allows for doing any data or model analysis quickly, with the ability to connect to a kernel on a remote server. You can also share a resulting notebook with colleagues.”

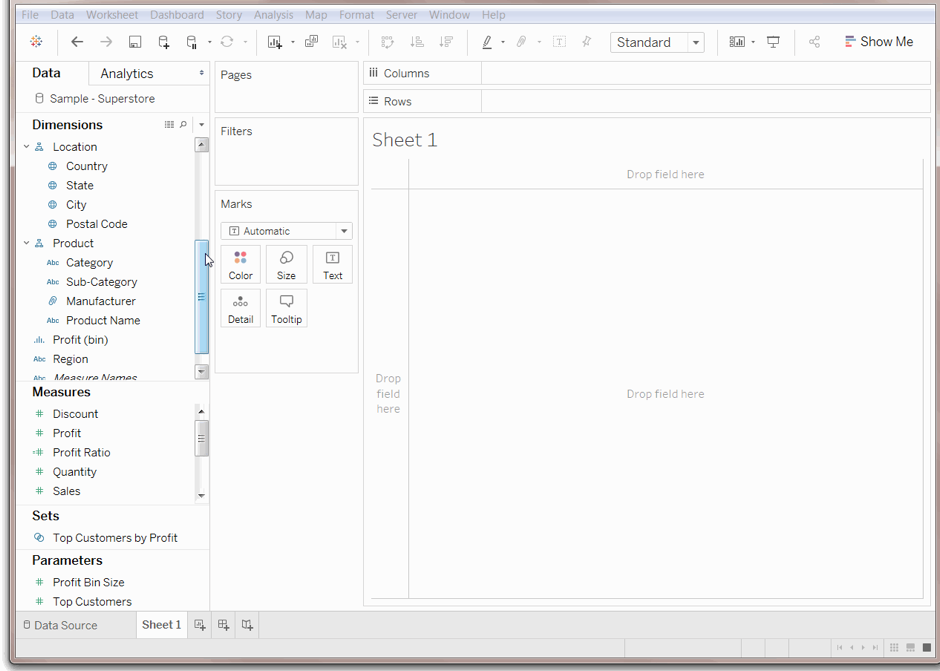

Tableau: powerful data exploration capabilities and interactive visualization: Tableau is a data visualization tool used in data science and business intelligence. A number of specific features make this software efficient for solving problems in various industries and data environments.

Tableau helps users explore data, quickly extract insights, and present them in understandable formats. It requires little programming skill and is easy to install on a wide range of devices. Although some scripting may be needed, most operations are performed by drag and drop.

Tableau supports real-time analytics and cloud integration (e.g., with AWS, Salesforce, or SAP), and it allows users to combine different datasets and manage data centrally.

Its ease of use and rich feature set are the reasons data scientists choose this tool.

3. Frameworks for general machine learning

NumPy: an extension package for scientific computing with Python: NumPy, mentioned earlier, is an extension package for numerical computing in Python that superseded its predecessors, Numeric and Numarray. It supports multidimensional arrays and matrices. Machine learning data is typically represented as arrays, and a matrix is simply a two-dimensional array of numbers. NumPy provides broadcasting functions, tools for integrating C/C++ and Fortran code, and capabilities for linear algebra, Fourier transforms, and random number generation.

Data science practitioners can also use NumPy as an efficient multidimensional container for generic data. Because arbitrary data types can be defined, NumPy integrates easily and quickly with a wide variety of databases.
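A minimal sketch of the capabilities just listed, assuming only NumPy: a 2-D array used as a small feature matrix, broadcasting, a linear solve, a Fourier transform, and seeded random numbers. All values are arbitrary illustration data.

```python
import numpy as np

# A 2-D array (matrix): 3 samples (rows) x 2 features (columns).
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])

# Broadcasting: subtracting a shape-(2,) vector from a shape-(3, 2) matrix
# applies it to every row, here centering each feature on its mean.
X_centered = X - X.mean(axis=0)  # [[-2, -2], [0, 0], [2, 2]]

# Linear algebra: solve A @ w = b for w.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])
w = np.linalg.solve(A, b)  # w is approximately [0.8, 1.4]

# Fourier transform of a pure cosine sampled at 8 points:
# the magnitude spectrum peaks at frequency bins 1 and 7.
signal = np.cos(2 * np.pi * np.arange(8) / 8)
spectrum = np.abs(np.fft.fft(signal))

# Random numbers from a seeded generator, for reproducibility.
rng = np.random.default_rng(seed=0)
noise = rng.normal(loc=0.0, scale=1.0, size=(3, 2))
```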
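One way NumPy supports arbitrary data types is through structured (record) dtypes. As a minimal sketch, the code below defines a record type resembling a database row and queries it by field name; the field names and values are hypothetical.

```python
import numpy as np

# A structured dtype: each element holds a string ID, an integer, and a float,
# much like a row in a database table. Field names are hypothetical.
row_type = np.dtype([("user_id", "U8"), ("age", np.int32), ("score", np.float64)])

rows = np.array(
    [("u001", 34, 0.82), ("u002", 27, 0.91), ("u003", 45, 0.67)],
    dtype=row_type,
)

# Fields are accessed by name, like table columns.
ages = rows["age"]

# Pick the record with the highest score.
best = rows[np.argmax(rows["score"])]

# Boolean filtering on a field, similar to a SQL WHERE clause.
over_30 = rows[rows["age"] > 30]
```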

scikit-learn: easy-to-use machine learning framework for numerous industries: scikit-learn is an open-source Python machine learning library built on top of SciPy (Scientific Python), NumPy, and Matplotlib. Started in 2007 by David Cournapeau as a Google Summer of Code project, scikit-learn is now maintained by volunteers. At the time of writing, 1,092 people had contributed to it.

scikit-learn provides users with a number of well-established algorithms for supervised and unsupervised learning. Data science practitioner Jason Brownlee from Machine Learning Mastery notes that the library focuses on modeling data rather than on loading, manipulating, or summarizing it.
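As a minimal sketch of both kinds of learning, assuming scikit-learn is installed, the snippet below trains a supervised classifier and runs an unsupervised clustering algorithm on the Iris dataset bundled with the library. The choice of `LogisticRegression` and `KMeans`, and their parameters, are illustrative assumptions rather than recommendations.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# A small built-in dataset: 150 iris flowers, 4 numeric features, 3 species.
X, y = load_iris(return_X_y=True)

# Supervised learning: fit on labeled training data, score on held-out data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)
clf = LogisticRegression(max_iter=1000)
clf.fit(X_train, y_train)
accuracy = clf.score(X_test, y_test)

# Unsupervised learning: group the same samples into 3 clusters without labels.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
cluster_ids = kmeans.fit_predict(X)
```

Loading here is a one-line helper; with real data, you would typically load and clean it with pandas first, which fits the library’s focus on modeling.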

4. ML frameworks for neural network modelling

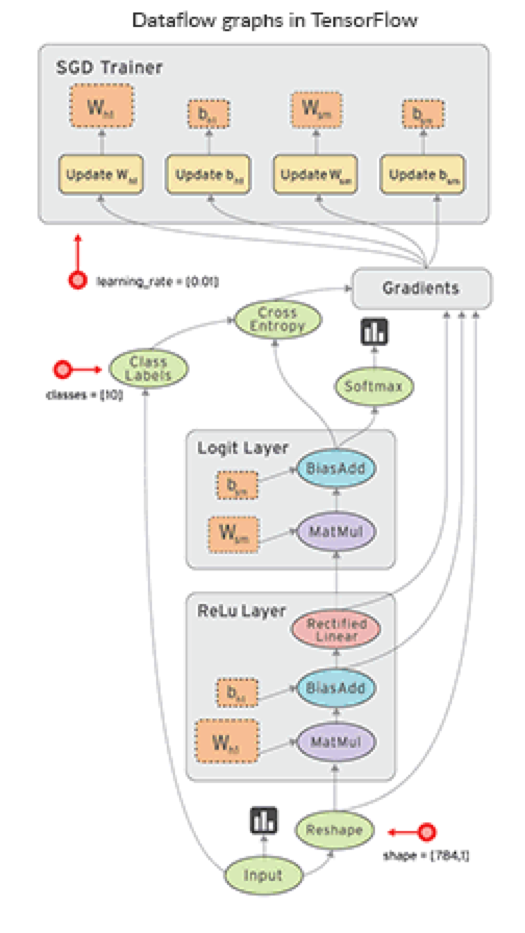

TensorFlow: flexible framework for large-scale machine learning: TensorFlow is an open-source software library for machine learning and deep neural network research, developed by the Google Brain team within Google’s AI organization and released in 2015.

A key feature of this library is that numerical computations are expressed as data flow graphs made of nodes and edges. Nodes represent mathematical operations, and edges represent the multidimensional data arrays (tensors) on which these operations are performed.

TensorFlow is flexible: it runs on various computing hardware (CPUs, GPUs, and TPUs) and devices, from desktops and server clusters to mobile and edge systems. It also offers a rich set of development tools, particularly for Android.
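In TensorFlow 2.x, eager execution is the default, and graphs are built by tracing Python functions with `tf.function`. The minimal sketch below, which assumes TensorFlow 2.x is installed, traces a small affine function and lists the resulting graph’s operations (nodes) together with the tensors (edges) they consume and produce. The function name `affine` and the shapes are arbitrary.

```python
import tensorflow as tf


# tf.function traces this Python function into a data flow graph.
@tf.function
def affine(x, w, b):
    return tf.matmul(x, w) + b


# Trace the function for specific input shapes to obtain a concrete graph.
concrete = affine.get_concrete_function(
    tf.TensorSpec(shape=(None, 3), dtype=tf.float32),
    tf.TensorSpec(shape=(3, 2), dtype=tf.float32),
    tf.TensorSpec(shape=(2,), dtype=tf.float32),
)

# Each node is an operation; its inputs and outputs are the tensors on the edges.
for op in concrete.graph.get_operations():
    inputs = [t.name for t in op.inputs]
    outputs = [t.name for t in op.outputs]
    print(f"{op.type:12s} inputs={inputs} outputs={outputs}")

# Calling the function executes the traced graph.
result = affine(tf.ones((4, 3)), tf.ones((3, 2)), tf.zeros((2,)))
```

The printout should include placeholder nodes for the three inputs, a `MatMul` node, and an addition node, connected by the tensors they pass along.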

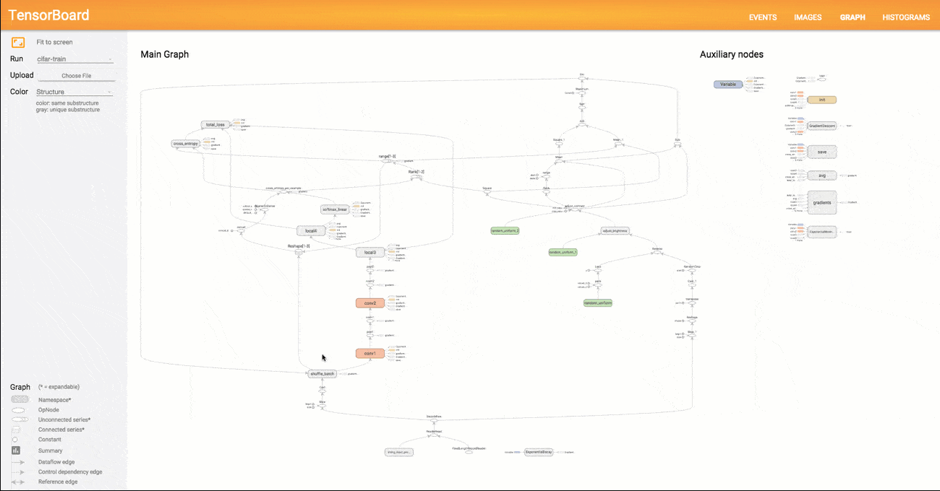

TensorBoard: A good tool for model training visualization: TensorBoard is a suite of tools for visualizing different aspects and stages of machine learning in TensorFlow. It reads TensorFlow event files containing summary data (observations about specific operations of a model) generated while TensorFlow is running.

Visualizing a model’s structure as a graph lets researchers verify that model components are where they should be and are connected correctly.

With the graph visualizer, users can explore different levels of model abstraction, zooming in and out of any part of the diagram. Another important benefit of TensorBoard visualization is that nodes of the same type or with similar structure are given the same color. Users can also color nodes by device (CPU, GPU, or a combination of both), highlight a specific node with the “trace inputs” feature, and view one or several charts at a time.
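As a minimal sketch of how those event files come into being, assuming TensorFlow 2.x, the snippet below writes a scalar summary with the `tf.summary` API. The “loss” values are synthetic, not from a real model, and the temporary log directory is an assumption made to keep the example self-contained.

```python
import os
import tempfile

import tensorflow as tf

# Write summaries to a throwaway directory; in practice this would be a path
# such as "logs/run1" that TensorBoard is pointed at.
logdir = tempfile.mkdtemp(prefix="tb_demo_")
writer = tf.summary.create_file_writer(logdir)

# Log a made-up, steadily decreasing "loss" curve, one value per training step.
with writer.as_default():
    for step in range(100):
        fake_loss = 1.0 / (step + 1)
        tf.summary.scalar("loss", fake_loss, step=step)
writer.flush()

# The writer produces event files whose names start with "events.out.tfevents".
event_files = [f for f in os.listdir(logdir) if f.startswith("events.out.tfevents")]
print(event_files)
```

Running `tensorboard --logdir` on that directory should then show the loss curve in the Scalars dashboard.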

This visualization approach makes TensorBoard a popular tool for model performance evaluation, especially for models of complex structures like deep neural networks.

PyTorch: an easy-to-use tool for research: PyTorch is an open-source machine learning framework for deep neural networks that supports GPU acceleration. Developed by Facebook’s team together with engineers from Twitter, Salesforce, ENS, ParisTech, Nvidia, Digital Reasoning, and INRIA, the library was first released in October 2016. PyTorch is based on the Torch framework, but unlike its predecessor, which is scripted in Lua, it is built for the widely used Python language.

A dynamic computational graph is one of the features that make this library popular. In many frameworks of the time, such as TensorFlow 1.x, Theano, CNTK, and Caffe, models are built statically: to change how a neural network behaves, a data scientist must change its whole structure and rebuild it from scratch. PyTorch makes this easier and faster because it builds the graph on the fly as the code runs, so the network’s behavior can be changed arbitrarily with little lag or overhead.
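A minimal sketch of this define-by-run idea, assuming PyTorch is installed: the number of times a layer is applied depends on the input and is decided by plain Python control flow, yet autograd still computes gradients through whichever graph was built on that call. The helper `num_steps`, the layer size, and the branching rule are hypothetical.

```python
import torch

torch.manual_seed(0)  # reproducible initial weights

# A single small layer (sizes chosen arbitrarily for illustration).
linear = torch.nn.Linear(4, 4)


def num_steps(x: torch.Tensor) -> int:
    """Hypothetical rule: inputs with a positive sum get 1 pass, others get 3."""
    return 1 if x.sum().item() > 0 else 3


def forward(x: torch.Tensor) -> torch.Tensor:
    # Ordinary Python control flow decides, at run time, how many times the
    # layer is applied, so the graph autograd records can differ per call.
    for _ in range(num_steps(x)):
        x = torch.tanh(linear(x))
    return x


x_pos = torch.ones(1, 4)   # sum > 0 -> 1 pass
x_neg = -torch.ones(1, 4)  # sum < 0 -> 3 passes

results = []
for x in (x_pos, x_neg):
    linear.zero_grad()      # clear gradients left from the previous call
    out = forward(x).sum()  # the graph is built on the fly during this call
    out.backward()          # backpropagate through whichever graph was built
    results.append((out.item(), linear.weight.grad.clone()))
```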

Keras: A lightweight, easy-to-use library for fast prototyping: Keras is a Python deep learning library capable of running on top of Theano, TensorFlow, or CNTK. Google Brain team member François Chollet developed it to let data scientists run machine learning experiments quickly.

The library runs on both GPUs and CPUs. Fast prototyping is possible thanks to its high-level, understandable interface and its division of networks into sequences of separate modules that are easy to create and add. Data scientists cite modeling speed as one of the library’s strengths.
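As a minimal sketch of that modular style, assuming TensorFlow 2.x with its bundled Keras (`from tensorflow import keras`), the snippet below stacks three modules into a small classifier, trains it briefly, and predicts. The layer sizes and the random training data are placeholders, not a real task.

```python
import numpy as np
from tensorflow import keras

# Stack separate modules (layers) into a network, one after another.
model = keras.Sequential([
    keras.Input(shape=(4,)),                      # 4 input features
    keras.layers.Dense(16, activation="relu"),    # hidden layer
    keras.layers.Dense(3, activation="softmax"),  # probabilities for 3 classes
])

model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Random placeholder data, only to demonstrate the training call.
rng = np.random.default_rng(0)
X = rng.normal(size=(64, 4)).astype("float32")
y = rng.integers(0, 3, size=64)

history = model.fit(X, y, epochs=2, batch_size=16, verbose=0)
probs = model.predict(X[:5], verbose=0)
```

Swapping a layer or adding another is a one-line change to the list, which is what makes this style quick for experiments.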

5. Big data tools

Apache Spark: the tool for distributed computing: Using Apache Spark for big data processing is like driving a Ferrari instead of a regular car: it’s faster, more convenient, and lets you explore more in the same amount of time. Spark is an open-source, distributed cluster-computing framework built around an in-memory data processing engine. The engine’s functionality covers ETL (Extract, Transform, and Load), machine learning, data analytics, batch processing, and stream processing.

MemSQL: a database designed for real-time applications: MemSQL is a distributed, in-memory SQL database platform for real-time analytics. It ingests and analyzes streaming data and runs petabyte-scale queries to power real-time applications such as instant messengers, online games, and community storage solutions. MemSQL supports queries over relational, geospatial, and JSON data.